This work is titled TransSense: Environmental Interconnectedness. The project began by looking into how technology can influence not only how we interact with fellow humans across the world, but also how we interact with our environment. So much of our lives is dictated by family, friends, acquaintances, and our surroundings. How can we extract environmental data to bring a sense of calm to our lives?

Concept + Goals + Audience:

I started this phase of the project by asking myself:

- How can information be translated in real time to the body?

- How can it be sensed through physical output?

Many of us communicate with the world through cellular devices. When too much time is spent communicating this way, it can create a disconnect between us and our physical surroundings. The aim of this project is to better integrate the wearer with the world through a different form, one that doesn’t rely on screens.

So I began to look at different forms of interaction, as well as what information we can receive globally from spaces. I have been interested in wearables for some time, and one of the hardest things I come across when developing wearable devices or garments is justifying the use of the item when the same information can be obtained from a phone or other mobile device. However, I find that certain forms of sensation and particular types of information lend themselves to the platform: the sensation being haptics, and the information being weather.

In order to sense a vibration, one must be touching the device that generates the feedback. Clothing touches the human body for over 90% of the day, which gives it a great advantage for intuitive connection. Clothing is also something that changes based on the weather. If it’s cold, one may wear a sweater. If it rains, a raincoat. If it’s hot, a short-sleeved shirt. With this project I want to push that function even further. Why not allow our clothing to “speak” to us about the weather? In this case, I wanted not only to inform the wearer about wind patterns, but to replicate them.

There is also a poetic side to this project. Replicating the wind on the body using information from anywhere in the world may allow one to feel more connected with a particular place in one’s past. For instance, by wearing a garment that can replicate the live wind patterns from my hometown, I may be able to establish a mental connection with that location, to fill a distant void. Of course, this is more poetic in nature, as I mentioned before. If it is possible at all, much more testing and consultation with psychological specialists would be needed.

To clearly state my goals, I want to “shrink” the world for those who are away from the places they care about (the audience) by replicating the live wind patterns of that location, in order to provide comfort and a more communicative relationship with our environment, which is becoming more cut off by the use of mobile technologies.

I chose this particular audience because I am one who misses home from time to time, as many do, but can always make it back. I am developing this product as a means for people in the future to cope with being away from a place that they care deeply about.

Precedent:

One precedent that I researched while working on this project was the work of Rachel Freire. Freire created a wearable called the Embodisuit. The suit acts as a wearable mesh, worn as an undergarment, that parses information the user is interested in. It uses heat, cooling, and haptic feedback to alert the wearer to various forms of changing data, such as the weather and people-to-people communication.

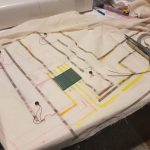

Description of the product:

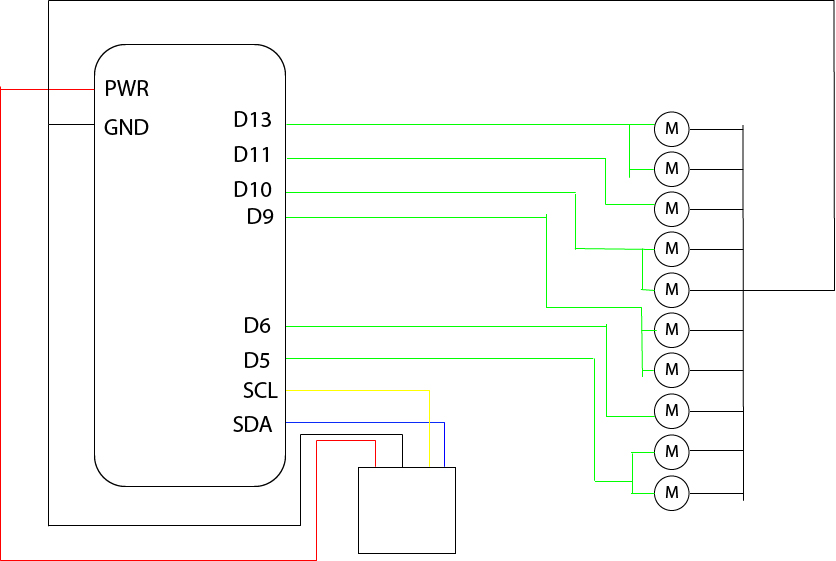

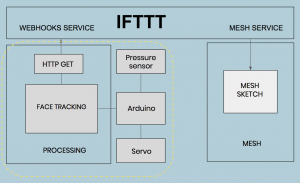

This product is a wearable haptic garment that allows the user to receive up-to-date weather conditions via the internet (IoT). The garment is embedded with 10 vibration motors. The motors are connected to an Adafruit Feather M0 WiFi microcontroller. When connected to a nearby accessible WiFi network, this microcontroller pulls weather data by location from the OpenWeatherMap API. It then parses out the information needed, in this case only the wind information, and maps it to the vibration motors. There are two pieces of information being mapped: first, the wind speed controls how much power is given to each motor, and second, the wind direction determines which motors are activated.
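As a rough illustration of the first mapping (not the code running on the garment), the sketch below converts a wind speed into an 8-bit PWM duty cycle. It assumes the speed arrives in metres per second, which is the default unit of the `wind.speed` field in the OpenWeatherMap response. The 20 m/s ceiling and the name `windSpeedToDuty` are hypothetical choices made for this example.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical ceiling: any wind at or above this speed (m/s) drives full power.
constexpr float kMaxWindSpeedMps = 20.0f;

// Map a wind speed in m/s to an 8-bit PWM duty cycle (0 = off, 255 = full power).
// Out-of-range speeds are clamped so the result always fits in 0-255.
std::uint8_t windSpeedToDuty(float speedMps) {
    float clamped = std::max(0.0f, std::min(speedMps, kMaxWindSpeedMps));
    // Scale linearly and round to the nearest integer step.
    return static_cast<std::uint8_t>(clamped / kMaxWindSpeedMps * 255.0f + 0.5f);
}
```

On an Arduino-compatible board, the returned value could be passed to `analogWrite(pin, duty)` for each motor pin. A linear scale is the simplest option; a curve that exaggerates light breezes might feel more natural on the body, but that would need testing.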

Because this garment is meant to replicate the wind, it was important to obtain a pulse effect across the body, as if a gust of wind were passing over the wearer. This meant that varying intensities of power would have to be given to each motor and, as stated previously, the direction gathered from the API would dictate where on the body the gust begins. For instance, if the wearer is facing north and the API states that the wind is coming from the north, the front-facing motors are given full strength, the motors on the sides are given slightly less power, and the motors on the back don’t receive any. How much power each section receives is modulated by the wind speed taken from the API.
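The front, side, and back weighting described above could be sketched roughly as follows. This is an illustration under stated assumptions rather than the garment's actual code: each motor is given a hypothetical compass bearing for the direction it faces (0 for the front when the wearer faces north, 90 for the right side, and so on), the zone boundaries of 45 and 135 degrees are invented for the example, and 0.75 stands in for "slightly less power". `baseDuty` is whatever PWM value the wind speed has already been mapped to.

```cpp
#include <cmath>

// Smallest absolute difference between two compass bearings, in degrees (0-180).
float angularDifference(float aDeg, float bDeg) {
    float d = std::fmod(std::fabs(aDeg - bDeg), 360.0f);
    return d > 180.0f ? 360.0f - d : d;
}

// Weight for one motor given the bearing it faces and the bearing the wind
// comes from. The zone limits and the 0.75 side weight are assumptions.
float motorWeight(float motorBearingDeg, float windFromDeg) {
    float d = angularDifference(motorBearingDeg, windFromDeg);
    if (d <= 45.0f) return 1.0f;    // facing into the wind: full strength
    if (d <= 135.0f) return 0.75f;  // side-on to the wind: slightly less
    return 0.0f;                    // sheltered side: off
}

// Scale the speed-derived duty cycle by the motor's directional weight.
int motorDuty(float motorBearingDeg, float windFromDeg, int baseDuty) {
    float scaled = motorWeight(motorBearingDeg, windFromDeg) * static_cast<float>(baseDuty);
    return static_cast<int>(std::lround(scaled));
}
```

With the wind coming from the north (0 degrees) and a base duty of 200, a front motor at bearing 0 would receive 200, side motors at 90 and 270 would receive 150, and a back motor at 180 would receive 0. Staggering the moment each zone switches on, front first and sides after, is one way the "gust passing over the body" pulse might be approximated.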

In order to make this a dynamic experience, a magnetometer (digital compass) was included initially. The compass would keep track of the user’s rotation and remap the “pulse” to a different part of the body, determined by the direction from the data set. I discuss the compass further below.
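The remapping idea comes down to subtracting the wearer's heading from the wind direction. The sketch below is a minimal illustration, assuming both angles are compass bearings in degrees and that the result is read as body-relative (0 for the front, 90 for the right side, 180 for the back, 270 for the left side). The function name is hypothetical.

```cpp
#include <cmath>

// Convert an absolute wind bearing into a bearing relative to the wearer's
// body, given the compass heading the wearer is currently facing.
// The result is normalised to the range [0, 360).
float relativeWindBearing(float windFromDeg, float headingDeg) {
    float rel = std::fmod(windFromDeg - headingDeg, 360.0f);
    if (rel < 0.0f) {
        rel += 360.0f;  // fmod keeps the sign of its first argument
    }
    return rel;
}
```

For example, with the wind from the north (0) and the wearer facing east (90), the result is 270: the gust should begin on the wearer's left side.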

Video Documentation:

Materials List:

- Fabric + Zipper (any fabric store)

- Conductive Fabric Tape

- Sewable LEDs

- Vibrating Mini Motor Disc

- Sewable Snaps

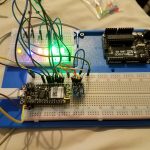

- Adafruit Feather M0 WiFi

- Accelerometer + Magnetometer (Compass)

- Perfboard

- Wire

- Arduino IDE

- My Code

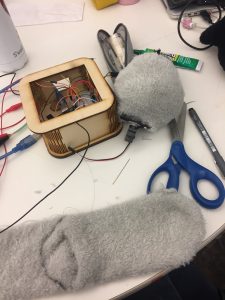

Process + Prototypes:

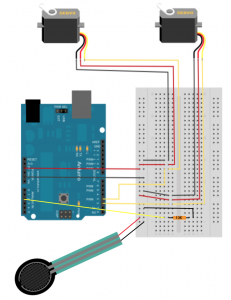

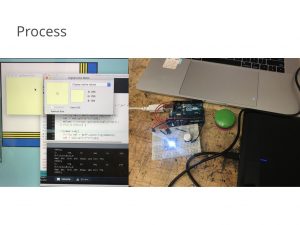

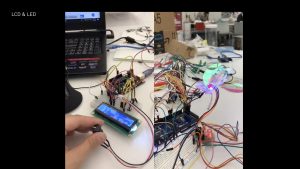

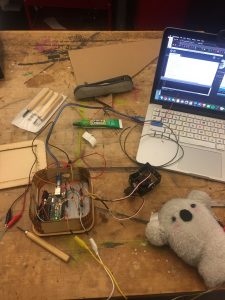

The process started with coding. Before the making began, I wanted to make sure that the idea was achievable in code with the electronics I had available. I started by coding the NodeMCU. I found this WiFi development board quick for connecting to the internet and grabbing information through the API; however, it was tedious when it came to programming the pins. The first thing I noticed was that there were no PWM pins, and I felt PWM was vital for creating the breeze effect. In addition, the number of pins available as outputs was limited due to the multiplexing of the pins. When WiFi mode was enabled in the code, several of the pins stopped outputting. If I tried to connect one pin to another, the board would reset.

At that point I decided to use the Adafruit Feather, which is capable of both PWM and WiFi connectivity and does not have the multiplexing issue. Once I was able to map the API data to the LEDs I was using for testing, I added a digital compass to keep track of which direction the user was facing, so as to create a more dynamic experience of feeling the motors react to your rotation. However, the compass was prone to many disruptions, electrical interference in particular. When I placed it near my computer, I found that the readings were quite sporadic and didn’t map well to 360-degree movement. Readings could jump from 0 degrees to 230 degrees with no values in between. I believe this also has to do with the human body moving faster than the microcontroller can process. In the end, I decided it would be best to focus my efforts elsewhere and save the compass for a future iteration.

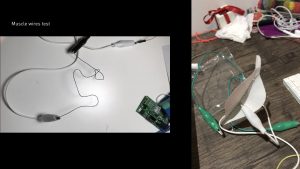

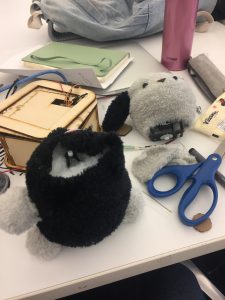

I then began crafting the circuit and determining the best placement on the body. I tested various fabrics and conductive materials. The Eeontex Stretch fabric was not conductive enough, so I switched to a conductive fabric tape, connected the vibration motors and sewable LEDs (more so for visual demonstration), and protected the circuit from short circuits by placing a layer of insulating material on top of it.

- Vibration Motor Test

- Stretch Test 1

- Stretch Test 2

- Insulation

Circuit Diagram:

Playtesting: