Project description

I am creating an interactive device that visualizes people’s interaction with online information, using a plant as a metaphor. By interacting with the installation, people can see how their interactions influence data, and they can rethink or imagine their possible relationship with data.

I am doing research on Internet information because I want to explore how humans would react if data appeared to be a living organism, with a lifespan and biological characteristics and habits. My aim is to discover a new form of interaction that connects the two and encourages people to rethink their relationship with information.

Precedents

Bionic design

I plan to redefine information by adding biological characteristics to it and giving it life. For that reason, I started to look at bionic designs and investigate how design can draw inspiration from nature. Usually, bionic designs imitate the shape and body structure of living creatures, but some also learn from their special behaviors and their relationships with other species. Studio PSK designed a series of radio devices called “Parasitic Products”[4] that can interfere with electrical equipment, imitating what parasites do to their hosts in nature. The purpose of the design is to highlight the importance of deviance as a way to instigate paradigm shifts in design. It is a good example of making people rethink the function of products. It also shows that bionic design does not have to follow the usual heroic discourse, which neglects the aggressive, predatory, and often ruthless lifestyle typical of most organisms. This project showed me a possible way to express a product in a bionic way. It also guided me toward a way of exploring new functions: imitating a biological relationship found in nature. I started to imagine possible biological relationships between data and people based on reality, such as parasitism, symbiosis, and saprophytism.

“Parasitic Products” that can interfere with electrical equipment

The natural metaphor of online information

Then I started to look at social media information, which has the closest connection with humans. It helps us build our public identity, and it relies on our input and attention to stay alive and active. I was inspired by Social Network Zoology by Chia-Hsuan Chou[5]. This work compares people’s behavior on social media to the social behavior of animals, such as hunting or courtship, in order to define a person’s social media personality as a certain kind of animal. It tells its story by creating vivid metaphors for human behavior on the internet, which showed me that metaphor is a concise storytelling method.

“Social Network Zoology” compares people’s behavior on social media

In my case, however, the focus is not on people’s social relationships online but on the interaction between people and the media. Based on this project, I decided to use visual metaphor to visualize people’s interaction with social media information. In order to create a narrative based on people’s relationships with social media, I observed what people usually do with social media information, how they react to it, and how these activities affect the information itself.

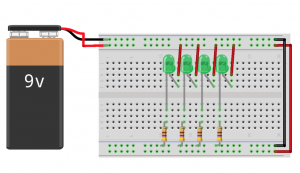

Interaction

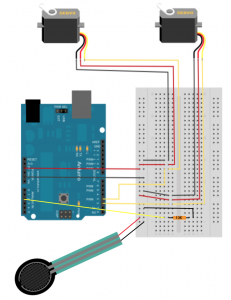

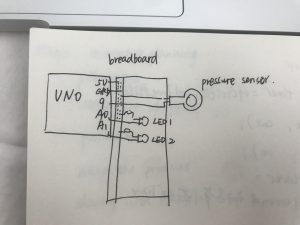

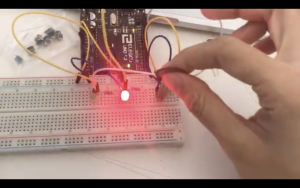

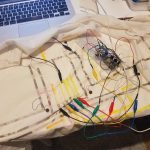

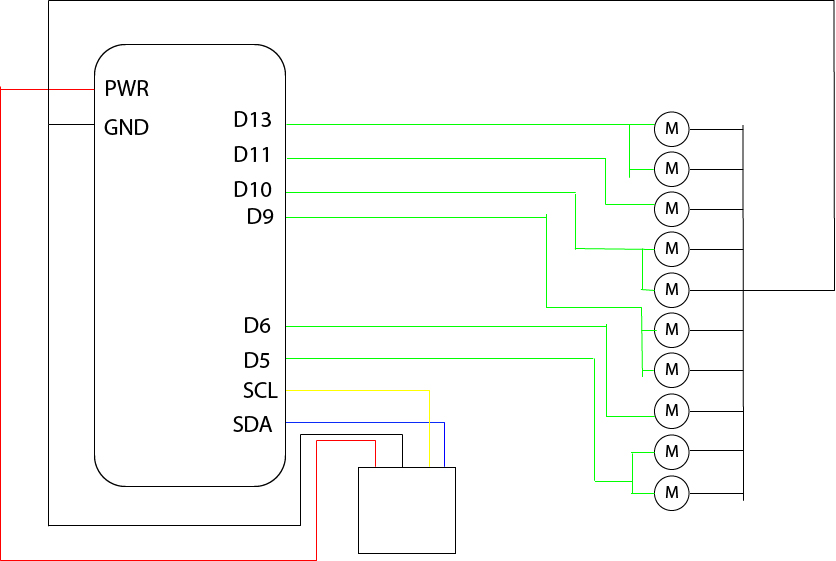

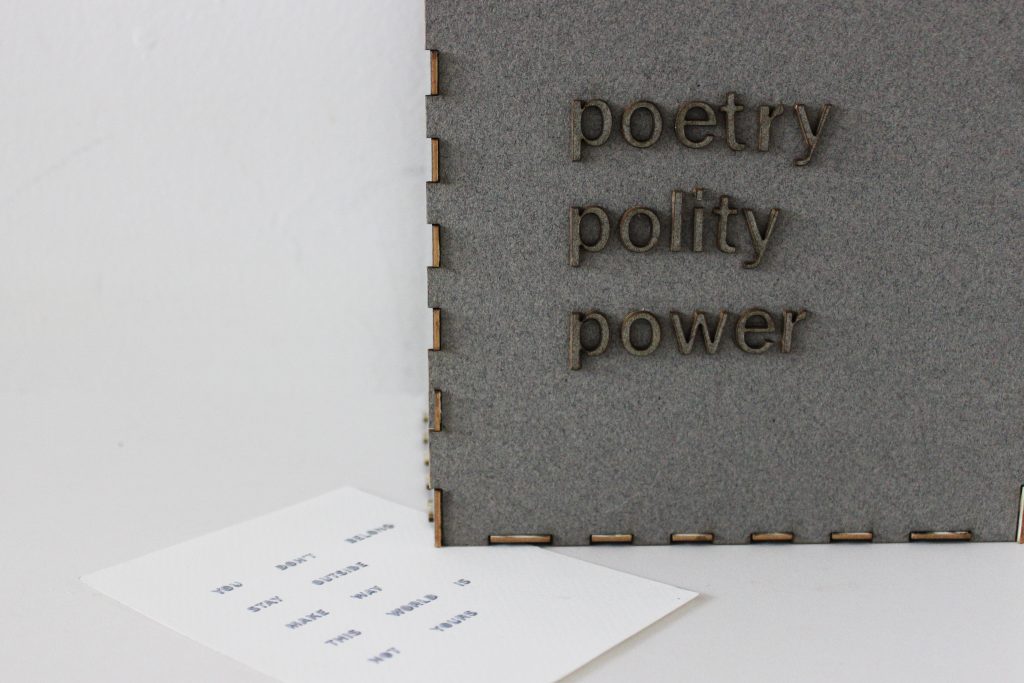

I am planning to make a plant-like product that represents social media, for example Facebook. The posts you make on Facebook would be printed on its leaves. The growing speed of the plant would depend on the interaction activity of people passing by. The usual actions we take on social media, such as sharing, liking, and commenting, would correspond to tearing off part of the printed post. Without people’s attention or interaction, the social media plant would die. This shows the relationship between social media and people: social media relies on input and attention from people, and people need social media to build their public identity. Also, turning virtual actions into physical ones vividly exaggerates the bond between the two.
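Before building the physical plant, the growth-and-decay behavior described above could be prototyped in software. The sketch below is a minimal, hypothetical model under stated assumptions. The class name `SocialMediaPlant`, the single “vitality” score, the energy value assigned to each action, and the decay rate are all invented for illustration and are not part of the design as specified. The physical act of tearing off part of a post is not modeled here, only its effect on growth.

```python
from dataclasses import dataclass

# Hypothetical energy each interaction gives the plant (assumed values).
ACTION_ENERGY = {"like": 1.0, "comment": 2.0, "share": 3.0}


@dataclass
class SocialMediaPlant:
    """Hypothetical model: interactions feed the plant, neglect makes it wither."""

    vitality: float = 10.0        # assumed starting health
    decay_per_tick: float = 1.0   # assumed loss per time step
    height: float = 0.0           # grows with interaction activity

    @property
    def alive(self) -> bool:
        return self.vitality > 0

    def interact(self, action: str) -> None:
        """Register a like, comment, or share from a passer-by."""
        if not self.alive:
            return  # a dead plant no longer responds
        energy = ACTION_ENERGY[action]
        self.vitality += energy
        self.height += energy  # more interaction means faster growth

    def tick(self) -> None:
        """Advance one time step; the plant always loses some vitality."""
        if self.alive:
            self.vitality = max(0.0, self.vitality - self.decay_per_tick)


if __name__ == "__main__":
    plant = SocialMediaPlant()
    for action in ["like", "share", "comment"]:
        plant.interact(action)
        plant.tick()
    print(plant.vitality, plant.height)  # 13.0 6.0
    # With no further attention, the plant eventually dies.
    while plant.alive:
        plant.tick()
    print(plant.alive)  # False
```

Keeping growth and decay in separate methods mirrors the installation: passers-by trigger `interact`, while a timer would call `tick` regardless of whether anyone is there.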

Playtesting: